User manuals

Revision as of 14:51, 19 April 2018


About TQAuditor

TQAuditor 2.12 is a system that evaluates and monitors translation quality. It allows you to:

- compare the unedited translation made by a translator with the edited version received from an editor;

- generate a report about editor’s corrections;

- classify each correction by mistake type and severity to get a translation quality evaluation score (maximum 100 points);

- ensure anonymous communication between a translator and an editor regarding corrections and mistakes classification;

- automate the maintenance of evaluation projects;

- save all evaluated translations in the database and create translation quality reports for the company: for example, a list of top translators with the highest scores or the monthly quality dynamics of an individual translator.

Start without registration

You can compare two versions of translated files in the system even without registering an account.

Go to https://tqauditor.com and use "Compare files":

It will open the Quick compare page:

- Translated files - add the unedited bilingual files here using the "Browse" button.

- Reviewed files - add the edited bilingual files here using the "Browse" button.

TQAuditor 2.12 accepts bilingual files of different formats (Helium, XLF, XLZ, SDLXliff, TTX, TMX, etc.). Click "Supported bilingual file types" to see all the file formats TQAuditor works with.

Press "Upload selected files".

It will open the page with the Comparison report and additional options:

Let’s review them in detail.


Comparison report deletion

Press "Delete comparison report" if you no longer need the current report:

Here you may also upload other files for a new comparison report by pressing "Upload files".

Export report to Excel

You can also export the report to Excel.

Click "Export to Excel":

The exported file lists the corrections in columns for comparison:

Markup display

With the "Markup display" option, you can choose how tags are displayed:

- Full - tags are shown at their original length, so you can see the data inside them:

- Short - tags are compressed, and you see only their position in the text:

- None - tags are completely hidden, so they will not distract you:

Press the "Apply" button after changing the preferences:


Units display

- All units - shows all text segments:

- With corrections - shows only the amended segments:

Press the "Apply" button after changing the preferences:

That’s it. To discover the other features of TQAuditor, you need to register.

Please see the next chapters.


Account registration

You’ll need to register an account to benefit from the full functionality of the system: compare many file pairs at once, classify mistakes, get the translation quality score, enable discussion between the translator and the editor, and see detailed reports on everything that happens with translation quality in your company.

So let’s start the registration…

1. Go to https://tqauditor.com and press "Sign Up":

2. Choose the type of account (Light account or Enterprise account):

The Light account includes comparison options:

- Unlimited quantity of file pairs per comparison report

- Uploading files in ZIP archives

- Export results to Excel

In addition to the comparison options, the Enterprise account includes evaluation options and statistics:

- Unlimited quantity of file pairs per comparison report

- Uploading files in ZIP archives

- Export results to Excel

- Evaluation

- Quality standard customization

- Reports and statistics

3. Fill in all the fields with required information and select "Submit".

Now you have an account. Next, add your translators, managers and evaluators to the system. Please see the next chapter.


Adding users

You can add users manually, one by one, or import their list from an Excel file. Each of these options is described below.

Adding users manually

To add a new user, go to Users => New user:

It will open the New user creation page.

Fill in all the fields and press the "Create" button:

The system will send a confirmation e-mail to the newly created user, and the user will appear in the Users list.

You may also edit the user details or resend the invitation (to do so, click the necessary user ID):

For more info, please see the User details page.

The user must confirm registration. The date and time of registration will be shown in the Registered at column:

Note: There are 4 types of users with different roles in the system:

- Translator

- Evaluator

- Manager

- Administrator

For more detailed information on System roles, please see the System roles section.

Import users from Excel

If you already have a list of users with their contact info, you can import it instead of entering the information manually.

To do so, go to Users=>Import from Excel:

For more detailed instructions on users import, please see the Import users from Excel page.

Once all users are added, you can start working with projects. Please see the next chapter.


Project creation

Creating a translation quality evaluation project takes a minute or two.

The manager only has to appoint the project evaluator, project translator and project arbiter, enter some basic project info, and let the system take care of the project.

To start a new project, go to Projects=>New project:

Fill in the form and press the "Create" button:

- TMS translation job code - project code, entered by the manager.

- TMS review job code - project code, entered by the manager (differs from the translation job code).

- Source language - language being translated from.

- Target language - language being translated to.

- Specialization - select a translation specialization (it must be created first: System => Specializations => New specialization).

- CAT word count - the weighted word count of the job.

- Project translator - user who performs the translation (any user may be assigned).

- Project evaluator - user who evaluates the translation (any user except the translator may be assigned).

- Project arbiter - user who acts as a judge between the translator and the evaluator in disputed cases. The arbiter’s decision on the evaluation is final (any user except the translator may be assigned).

- Note for evaluator - the manager may leave additional information for the project evaluator here.

Note 1: The manager may assign himself as Project arbiter, Project evaluator or Project translator.

Note 2: The system role for Arbiter may be Evaluator, Manager or Administrator.

The new project will appear in the list.

To see project details, select the ID number:

It will open the Project details page.

The manager can control the project: edit the project, download project files, reassign participants (manager, translator, evaluator or arbiter), or delete the project entirely:

Note: The evaluator (not the manager) uploads the files. The manager may only download them if needed:

Now the project has been created, and the manager can leave the rest to the system. The system sends an email notification to the project evaluator, who has to follow the instructions in the next chapter.


Files comparison

After receiving an evaluation request from the system, the project evaluator has to compare the edited files with the unedited ones.

First, the evaluator uploads them:

Then, the evaluator chooses the files to compare and clicks "Upload selected files":

The files are uploaded:

The evaluator may select several files by using the "Browse" buttons.

After uploading, click "Create comparison report":

The comparison report has the word count for each segment, useful statistics and filters.

You may even export the report to Excel.
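The manual does not describe how the comparison is computed internally. As a rough illustration only, the Python sketch below models a comparison report: each segment (a hypothetical `Segment` record holding source, translated and reviewed text) gets a naive whitespace word count of its source and a word-level diff from the standard `difflib` module, and the `with_corrections_only` flag mimics the "With corrections" units display. The record fields, the `compare` helper and the counting rule are assumptions, not TQAuditor's implementation.

```python
import difflib
from dataclasses import dataclass


@dataclass
class Segment:
    # Hypothetical model of one bilingual unit (not TQAuditor's data format).
    seg_id: int
    source: str
    translated: str  # translator's unedited target text
    reviewed: str    # editor's corrected target text


def word_count(text: str) -> int:
    # Naive whitespace count; CAT tools use more elaborate counting rules.
    return len(text.split())


def compare(segments, with_corrections_only=False):
    """Build simple comparison-report rows for a list of segments."""
    rows = []
    for seg in segments:
        changed = seg.translated != seg.reviewed
        if with_corrections_only and not changed:
            continue  # like the "With corrections" units display option
        # Word-level diff: "- word" was removed, "+ word" was inserted.
        # ndiff may also emit "? " hint lines; they are filtered out here.
        diff = [
            token
            for token in difflib.ndiff(seg.translated.split(), seg.reviewed.split())
            if token.startswith(("- ", "+ "))
        ]
        rows.append({
            "id": seg.seg_id,
            "words": word_count(seg.source),
            "changed": changed,
            "diff": diff,
        })
    return rows


segments = [
    Segment(1, "Hello world", "Bonjour monde", "Bonjour le monde"),
    Segment(2, "Thank you", "Merci", "Merci"),
]
for row in compare(segments, with_corrections_only=True):
    print(row)  # {'id': 1, 'words': 2, 'changed': True, 'diff': ['+ le']}
```

Calling `compare(segments)` without the flag corresponds to the "All units" display and returns both rows, the unchanged one with an empty diff.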

Let’s continue - press "Start evaluation":

You may configure the evaluation process:

- Skip locked units - hide "frozen" units (for example, parts that are extremely important to the client and must stay unchanged; besides, extra units slow down the editor’s work).

- Skip segments with match >= - fuzzy match percentage. The program hides segments whose match is greater than or equal to the value you specify.

- Evaluation sample word count limit - the number of words in edited segments, chosen for evaluation.
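The three options above interact when the evaluation sample is built. The manual does not describe the selection algorithm, so the following Python sketch is only a hypothetical model: it assumes a `Unit` record with the fields shown, and it assumes edited units are taken in document order until the next one would push the sample past the word limit (1000 words is the system default mentioned under Evaluation settings).

```python
from dataclasses import dataclass


@dataclass
class Unit:
    # Hypothetical unit record; field names are illustrative only.
    unit_id: int
    words: int     # word count of the unit
    locked: bool   # "frozen" unit in the bilingual file
    match: int     # fuzzy match percentage, 0-100
    edited: bool   # True if the editor changed the translation


def select_sample(units, skip_locked=True, skip_match_at_or_above=None, word_limit=1000):
    """Collect edited units until adding the next one would exceed word_limit.

    Assumption: units are considered in document order.
    """
    sample, total = [], 0
    for unit in units:
        if skip_locked and unit.locked:
            continue  # "Skip locked units"
        if skip_match_at_or_above is not None and unit.match >= skip_match_at_or_above:
            continue  # "Skip segments with match >="
        if not unit.edited:
            continue  # the sample counts words in edited segments only
        if total + unit.words > word_limit:
            break  # "Evaluation sample word count limit" reached
        sample.append(unit)
        total += unit.words
    return sample


units = [
    Unit(1, 400, False, 0, True),
    Unit(2, 300, True, 0, True),     # locked
    Unit(3, 200, False, 100, True),  # 100% match
    Unit(4, 500, False, 75, True),
    Unit(5, 100, False, 0, False),   # not edited
    Unit(6, 300, False, 0, True),    # would exceed the limit
]
picked = select_sample(units, skip_match_at_or_above=95, word_limit=1000)
print([u.unit_id for u in picked])  # [1, 4]
```

Stopping at the first unit that does not fit (rather than skipping it and trying smaller ones) is a design choice of this sketch; the real system may behave differently.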

Press "Start evaluation":

The evaluation started.

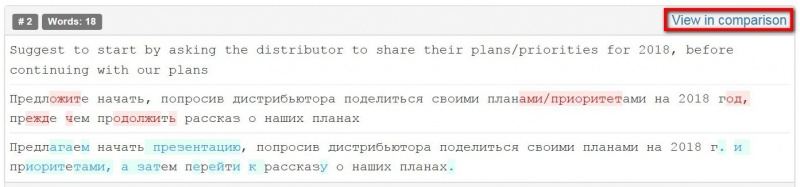

For convenience, you may view every segment in the comparison report - use "View in comparison":

When you have evaluated all segments, press "Complete evaluation":

Describe the translation in general or give advice to the translator and press the "Complete" button:

After the comparison report is generated, the system sends a notification to the translator, who can see all the corrections made in his deliveries.

But this is just the beginning: the project evaluator may now start the quality assessment process. For more info, please see the next chapter.


Quality evaluation

In this step, the project evaluator has to select the sample for the quality assessment and classify every correction by type and severity.

Select "Add mistake":

Add information about the mistake and click "Submit":

You can also edit or delete a mistake or comment:

You may add another mistake by pressing "Add mistake":

When the mistake classification is done, the project evaluator has to press "Complete evaluation" => "Complete", and the system will send the quality assessment report to the translator. See the translator’s steps in the next chapter.

Please note that you may export the evaluation report with the mistake classification.


Discussion of mistakes

When the project evaluator finishes assessing the translation quality, the project translator gets an email notification.

Translator reviews the quality feedback

After receiving the email with the translation quality evaluation, the translator should do the following:

1. View the Comparison report. Look through all the corrections made by the evaluator.

2. Go to the Evaluation report.

3. Look through the classification of each mistake.

4. If you agree with the classification of all mistakes, press "Complete project". The project and its evaluation score are finalized at this stage:

If you do not agree with the classification of some mistakes, do the following:

5. Press "Add comment" in the box of the mistake you disagree with, enter your comment, and click "Submit":

6. When you have entered all the comments, send the project for reevaluation by pressing "Request reevaluation":

7. The project will be sent to the evaluator, who will review your comments. If they are convincing, the evaluator will change the mistake severity in your favour. You will receive the reevaluated project. You can send this project for reevaluation one more time.

8. If you have not reached an agreement with the evaluator, you can send the project to the arbiter by pressing "Request arbitration" (it appears instead of "Request reevaluation"):

9. The arbiter will provide a final score that cannot be disputed.

Evaluator reviews the translator's comments

At this stage, the evaluator needs to review all of the translator’s objections and proceed as follows:

1. If the translator is right, change the mistake’s severity and enter your comment why it has been changed.

If the translator is wrong, enter your comment why the mistake's severity has not been changed.

2. To finish, press "Complete evaluation"=>"Complete". The project will be sent to the translator for review.

Arbiter reviews the project

Unless the system has been set up otherwise, the translator has 3 evaluation attempts in total. If the translator and the evaluator have not managed to reach an agreement by the last attempt, the translator sends the project to the arbiter.

The user assigned as the arbiter is notified by the system.

The arbiter has to assign a final score on the disputed matters. Look through all the rows where the translator and evaluator disagree.

If the translator is right, change the mistake’s severity and enter your comment why it has been changed.

If the translator is wrong, enter your comment why the mistake severity has not been changed.

Finally, the arbiter should press "Complete project". The project will be finalized and all its participants will receive the respective message.


Projects filters

For convenience, you may apply different project filters:

For more details, please see the Additional filters section.

You may also sort projects by a particular criterion: click the title of any column, and the projects will be sorted by it (an arrow button appears):

Note: The column headers which enable this sorting feature are highlighted in blue.

For more details, please see the Projects list page.


Statistics and reports

All translation quality assessment data is accumulated in the system, so after some time you’ll be able to generate reports.

For example, you can see the best-scoring translators or view a detailed report on each translator: how his quality has changed over time, what typical mistakes he makes, in which specializations he scores better, etc.

You can access reports by pressing Reports in the upper section of the screen:

- Users with the Translator role can access only their individual reports on the quality of their translations:

- Users with the Evaluator role can access their individual reports on the quality of their translations and reports on their evaluations:

- Users with the Manager and Administrator roles can access all the available reports:

Average score reports

To view the Average score reports, go to Reports=>Average score and select the necessary report:

Here you may see the following reports:

- Average score per translator - shows the average score per translator.

- Average score per evaluator - this report shows the average score per evaluator.

- Average score per manager - shows the average score per manager.

- Average score per specialization - shows the average score per specialization.
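Conceptually, each of these reports can be thought of as grouping completed projects by one attribute and averaging their scores. The Python sketch below illustrates that idea; the project fields and the `average_score_by` helper are hypothetical, and it uses a plain unweighted mean, whereas TQAuditor may compute its averages differently.

```python
from collections import defaultdict
from statistics import fmean


def average_score_by(projects, key):
    """Average the scores of completed projects grouped by `key`."""
    groups = defaultdict(list)
    for project in projects:
        groups[project[key]].append(project["score"])
    # Unweighted mean per group (an assumption; real reports may weight scores).
    return {name: fmean(scores) for name, scores in groups.items()}


# Hypothetical completed projects.
projects = [
    {"translator": "Anna", "evaluator": "Eva", "specialization": "Legal", "score": 90},
    {"translator": "Anna", "evaluator": "Eva", "specialization": "IT", "score": 80},
    {"translator": "Ben", "evaluator": "Max", "specialization": "Legal", "score": 70},
]
print(average_score_by(projects, "translator"))      # {'Anna': 85.0, 'Ben': 70.0}
print(average_score_by(projects, "specialization"))  # {'Legal': 80.0, 'IT': 80.0}
```

The same helper covers the per-evaluator report by passing `"evaluator"` as the key; a per-manager report would need a manager field on each project.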

Translator report

To view the Translator report, go to Reports=>Translator report:

On this page, you will find information about every translator.

For more details, please see the Translator report page.

Evaluator report

To view the Evaluator report, go to Reports=>Evaluator report:

On this page, you will find information about every evaluator.

For more details, please see the Evaluator report page.


System settings

You can change and set system values in the System menu:

Each of these menu screens is described below.

Quality standards

By default, the system has predefined quality standards, i.e. types of mistakes, penalty scores, etc., but you can change them to define your own corporate quality standards. To do so, go to System=>Quality standard:

For more detailed information, please see the Quality standard page.

Mistake severities

Mistake severity indicates how serious a mistake is.

Go to System=>Mistake severities:

This menu screen contains two submenus. Each of them is described below.

- Mistake severities list - here you may view the list of default mistake severities proposed by the system:

Note: You can’t delete mistake severities connected with projects. Instead, open the unneeded mistake severity by pressing "Edit" and uncheck the "Enabled" box. It will no longer appear in the drop-down list.

- New mistake severity - here you may add a new mistake severity:

For more details, please see the Mistake severities list and New mistake severity pages.

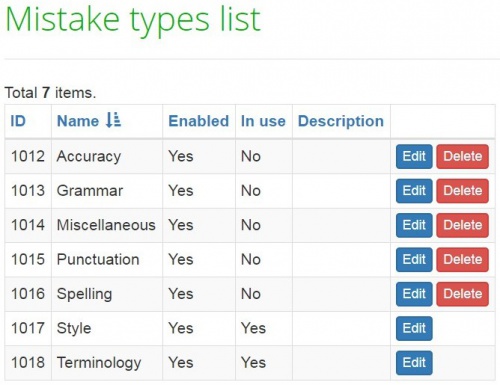

Mistake types

Mistake type is the kind of mistake. For example, Grammar, Punctuation, etc.

Go to System=>Mistake types:

This menu screen contains two submenus. Each of them is described below.

- Mistake types list - here you may view the list of default mistake types proposed by the system:

Note: You can’t delete mistake types connected with projects. Instead, open the unneeded mistake type by pressing "Edit" and uncheck the "Enabled" box. It will no longer appear in the drop-down list.

- New mistake type - here you may add a new mistake type:

For more details, please see the Mistake types list and New mistake type pages.

Specializations

Specialization is the subject field that a translation is focused on.

Go to System=>Specializations:

This menu screen contains two submenus. Each of them is described below.

- Specializations list - here you may view the list of default specializations proposed by the system:

Note: You can’t delete specializations connected with projects. Instead, open the unneeded specialization by pressing "Edit" and uncheck the "Enabled" box. It will no longer appear in the drop-down list.

- New specialization - here you may add a new specialization:

For more details, please see the Specializations list and New specialization pages.

Edit quality levels

Go to System=>Edit quality levels:

Here you may see the list of default quality levels proposed by the system:

Note: You can’t remove quality levels connected with projects.

But you may add a new one (click "Add below") or edit current quality levels:

Evaluation settings

Go to System=>Evaluation settings:

On the Evaluation settings page you may define maximum evaluation attempts and default evaluation sample word count limit:

- Maximum evaluation attempts - here you may define how many times the translator may dispute the evaluation. By default, the translator may do so 3 times: the evaluator replies the first 2 times, and the 3rd time the arbiter replies and completes the discussion.

- Default evaluation sample word count limit - here you may define the number of words for evaluation (the system offers 1000 words by default).
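The default dispute rule above can be expressed as a small function. This Python sketch is only a model of the described behaviour, not TQAuditor code: the `next_reviewer` name is hypothetical, attempts are assumed to be numbered from 1, and `max_attempts` stands for the "Maximum evaluation attempts" setting.

```python
def next_reviewer(attempt: int, max_attempts: int = 3) -> str:
    """Return who answers the translator's n-th dispute (1-based).

    Models the default described above: with max_attempts=3 the evaluator
    replies to attempts 1 and 2, and the arbiter replies to attempt 3,
    which completes the discussion.
    """
    if not 1 <= attempt <= max_attempts:
        raise ValueError(f"attempt must be between 1 and {max_attempts}")
    return "arbiter" if attempt == max_attempts else "evaluator"


for n in range(1, 4):
    print(n, next_reviewer(n))
# 1 evaluator
# 2 evaluator
# 3 arbiter
```

Raising the setting simply gives the evaluator more rounds before the last attempt goes to the arbiter.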

Reminders

Go to System=>Reminders:

The system can send reminders about projects or close them automatically when needed.

As you may see, the system is quite flexible in terms of settings, and you may configure the system to work a bit differently than it does by default.

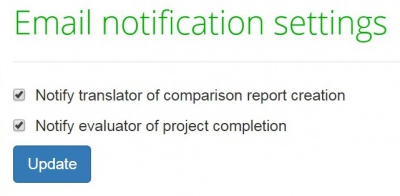

Notifications

Go to System=>Notifications:

Here you can configure whether the system should send notifications of comparison reports creation to translators, and of projects completion to evaluators:

Enable or disable the corresponding notification, and press "Update" to save changes.

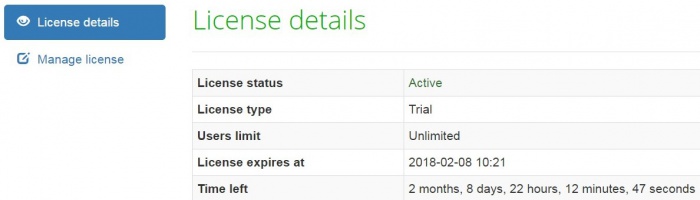

License

Go to System=>License:

On this page, you may see your license details:

By pressing "Manage license" you can manage your license.

For more details, please see the Licensing page.

That’s all you need for productive work in TQAuditor.

If any questions arise, please contact us.

Good luck!