Evaluation report


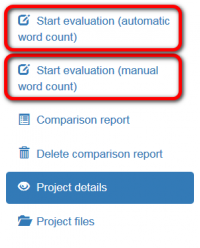


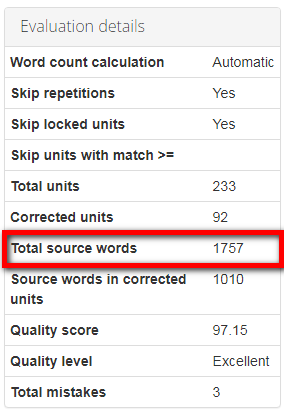


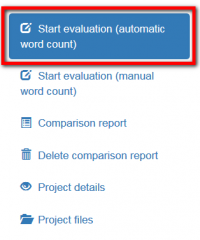


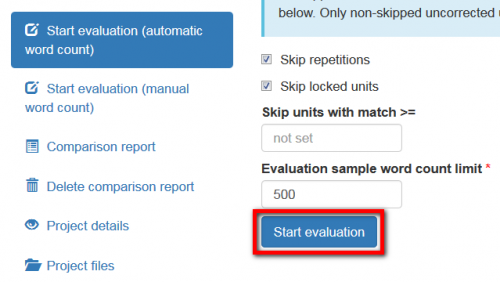


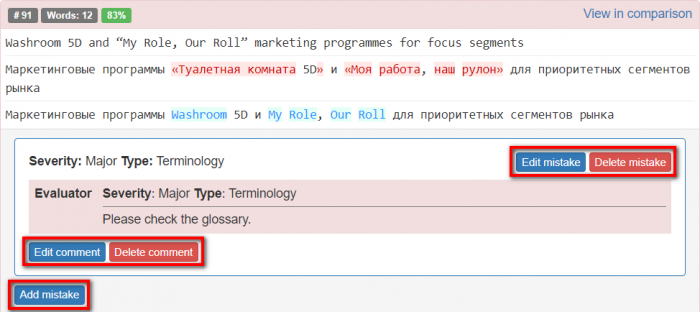


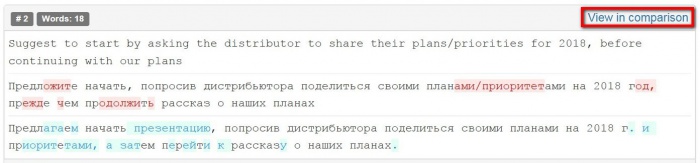


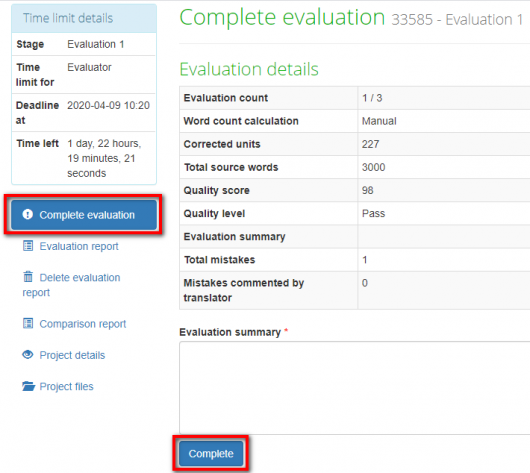


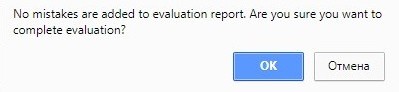


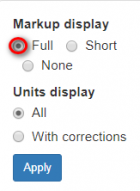

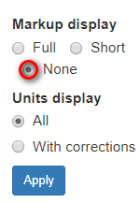


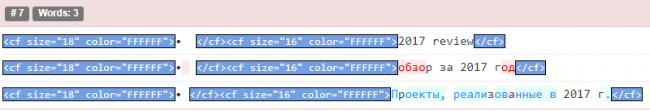


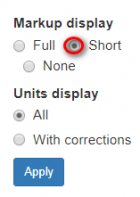

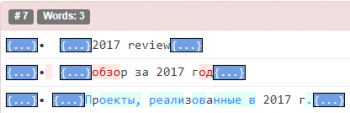

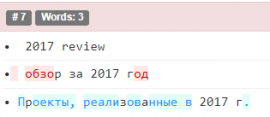

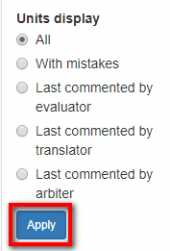


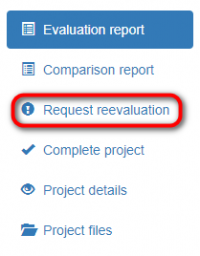


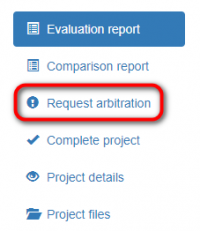


Latest revision as of 18:52, 18 February 2022


General information

After the evaluator has uploaded the files, they can start the evaluation.

The evaluator can start the evaluation either with an automatic word count or by entering the word count manually when starting the process.

For more information on both methods, see the relevant sections below.

Automatic vs. manual word count

Automatic:

1. Used for fully reviewed files.

2. The "Evaluation sample word count limit" determines how many segments are displayed for evaluation.

3. The system displays only corrected segments, selected at random, up to the total word count specified as the "Evaluation sample word count limit".

For example, if the limit is set to 1000, the system displays roughly 100 segments with about 1000 words in total.

- The number of segments varies with segment length.

- Note: If the evaluator sets the limit to 1000 but all corrected segments together contain only 500 words (for example, in a 900-word file), the system displays all corrected segments, about 500 words in total. This means that 1000 can safely be used as the "Evaluation sample word count limit" even if the actual word count is lower.

4. The score is calculated using the "Total source words" value from the "Evaluation details" section, not the total source word count of the file.

For example, if the evaluation report includes corrected segments with about 1000 words and "Total source words" in "Evaluation details" is 1757, the formula uses 1757.
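The sample selection in items 2 and 3 can be pictured with a short Python sketch. This is not TQAuditor's actual implementation: the `Segment` class, the `select_sample` function and the rule "keep adding random corrected segments until the running total reaches the limit" are assumptions made for illustration. The rule reproduces the two documented effects: the word total lands near the limit, and a limit larger than the corrected word count simply returns every corrected segment.

```python
import random
from dataclasses import dataclass


@dataclass
class Segment:
    # Hypothetical segment model; TQAuditor's internal data structure is not documented here.
    seg_id: int
    word_count: int
    corrected: bool


def select_sample(segments, word_limit, seed=None):
    """Hypothetical sketch: pick random corrected segments until `word_limit` words are reached.

    The last segment added may push the total slightly over the limit. If all
    corrected segments together have fewer words than the limit, all of them
    are returned.
    """
    rng = random.Random(seed)
    corrected = [s for s in segments if s.corrected]  # only corrected segments qualify
    rng.shuffle(corrected)                            # random selection order

    sample, total = [], 0
    for seg in corrected:
        if total >= word_limit:
            break
        sample.append(seg)
        total += seg.word_count
    return sample, total


# 100 ten-word segments, half of them corrected: 500 corrected words in total.
segments = [Segment(i, 10, corrected=(i % 2 == 0)) for i in range(100)]

# A limit of 1000 is safe: every corrected segment is returned.
sample, words = select_sample(segments, word_limit=1000, seed=1)
print(len(sample), words)  # 50 500
```

Because the loop stops only once the running total reaches the limit, an oversized limit never fails; it just exhausts the corrected segments.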

Manual:

1. Used for partially reviewed files, so that the file does not have to be split into parts with only the reviewed part imported.

2. "Evaluated source words" should be the total number of words in the reviewed part of the file.

For example, if a reviewer reviewed only 1500 words of a 5000-word file, 1500 should be entered as "Evaluated source words", and the system will not take the remaining 3500 words into account.

3. The system displays all corrected segments, so if the reviewed part of the file is large, the evaluator will have many more segments to evaluate.

4. The score is calculated using "Total source words". In this mode, "Total source words" equals "Evaluated source words".
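Manual mode skips sampling altogether. The sketch below is a hypothetical Python illustration (the `start_manual_evaluation` function and the `Segment` fields are invented for this example): every corrected segment is returned, and the number entered as "Evaluated source words" is used unchanged as "Total source words".

```python
from dataclasses import dataclass


@dataclass
class Segment:
    # Hypothetical segment model used only for this illustration.
    seg_id: int
    word_count: int
    corrected: bool


def start_manual_evaluation(segments, evaluated_source_words):
    """Hypothetical sketch of manual mode: no sampling, entered word count drives the score."""
    to_show = [s for s in segments if s.corrected]  # all corrected segments are shown
    total_source_words = evaluated_source_words     # used as-is in the score
    return to_show, total_source_words


# A 5000-word file (100 segments x 50 words) where only the first 1500 words were reviewed.
segments = [Segment(i, 50, corrected=(i < 30 and i % 3 == 0)) for i in range(100)]
shown, tsw = start_manual_evaluation(segments, evaluated_source_words=1500)
print(len(shown), tsw)  # 10 1500
```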

Start evaluation (automatic word count)

If you select this option, the system will display randomly selected segments that contain only corrected units for evaluation.

Then you may configure the evaluation process:

- "Skip repetitions" — repeated segments are hidden, and only one of them is displayed.

- "Skip locked units" — locked ("frozen") units are not displayed (units are locked, for example, when a client wants some important parts of the translated text to stay unchanged).

- "Skip units with match >=" — units with matches greater than or equal to a specified number will not be displayed.

- "Evaluation sample word count limit" — this value is used to adjust how many segments for evaluation will be displayed.

Adjust the settings and click "Start evaluation".
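The three "Skip ..." options can be read as filters applied before the sample is drawn. The Python sketch below is a hypothetical illustration, not TQAuditor code: the `Unit` fields, the `apply_settings` function and the order in which the filters run are assumptions. The "Evaluation sample word count limit" would then be applied to the units that remain.

```python
from dataclasses import dataclass


@dataclass
class Unit:
    # Hypothetical unit model; field names are illustrative only.
    unit_id: int
    source: str
    locked: bool
    match: int  # translation memory match percentage, 0-100


def apply_settings(units, skip_repetitions=True, skip_locked=True, skip_match_at_least=None):
    """Filter units according to the "Skip ..." options (hypothetical sketch)."""
    seen_sources = set()
    kept = []
    for u in units:
        if skip_locked and u.locked:
            continue  # frozen unit
        if skip_match_at_least is not None and u.match >= skip_match_at_least:
            continue  # match at or above the threshold
        if skip_repetitions:
            if u.source in seen_sources:
                continue  # only one copy of a repeated segment is shown
            seen_sources.add(u.source)
        kept.append(u)
    return kept


units = [
    Unit(1, "Hello", locked=False, match=0),
    Unit(2, "Hello", locked=False, match=0),         # repetition of unit 1
    Unit(3, "Legal notice", locked=True, match=0),   # frozen by the client
    Unit(4, "Save", locked=False, match=100),        # 100% match
    Unit(5, "Cancel", locked=False, match=75),
]
print([u.unit_id for u in apply_settings(units, skip_match_at_least=100)])  # [1, 5]
```

In this sketch, if the first copy of a repeated segment is dropped by another filter, the next copy is shown instead; the real system may resolve that case differently.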

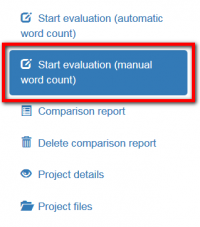

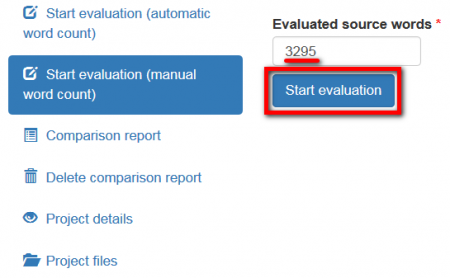

Start evaluation (manual word count)

If the file was reviewed partially, you can use the evaluation with manual word count.

To do this, click "Start evaluation (manual word count)":

Enter the number of evaluated source words (total number of words in the reviewed part of the file):

Then click "Start evaluation" and the system will display all corrected segments of the document.

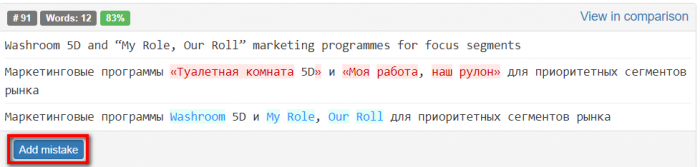

Mistakes

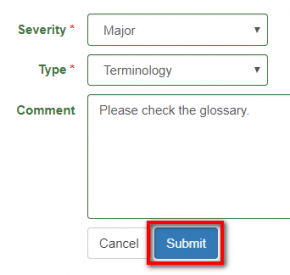

To add a mistake, click the "Add mistake" button in the relevant segment.

Specify the mistake type and severity, leave a comment if needed, and click "Submit".

You can edit or delete mistakes and comments, and add more mistakes, by clicking the corresponding buttons.

- View in comparison — this link opens the page with the comparison report.

When all the mistakes are added and classified, click "Complete evaluation", write an evaluation summary, and click "Complete". The translator will receive a notification.

- Note: If you click "Complete" and no mistakes have been added to the report, the system displays a warning.
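The mistake workflow above can be modelled with a small report object. This is a hypothetical Python sketch: the `Mistake` and `EvaluationReport` classes, the example type and severity values, and the `confirm_without_mistakes` flag (used to proceed after the warning) are assumptions, and the returned strings are placeholders rather than the system's real messages.

```python
from dataclasses import dataclass, field


@dataclass
class Mistake:
    # Hypothetical record; the type and severity values used below are examples only.
    segment_id: int
    mistake_type: str
    severity: str
    comment: str = ""  # comments are optional


@dataclass
class EvaluationReport:
    mistakes: list = field(default_factory=list)
    summary: str = ""
    completed: bool = False

    def add_mistake(self, mistake):
        self.mistakes.append(mistake)

    def delete_mistake(self, mistake):
        self.mistakes.remove(mistake)

    def complete(self, summary, confirm_without_mistakes=False):
        """Complete the evaluation, or return a warning if no mistakes were added."""
        if not self.mistakes and not confirm_without_mistakes:
            return "warning: no mistakes added"  # placeholder text; report stays open
        self.summary = summary
        self.completed = True
        return "completed: translator notified"  # placeholder text


report = EvaluationReport()
print(report.complete("Good overall quality"))  # warning, report stays open
report.add_mistake(Mistake(12, "Terminology", "Minor", "Use the glossary term"))
print(report.complete("Good overall quality"))  # completes the evaluation
```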

Markup display

Markup display settings let you choose how tags are displayed:

- "Full" — tags are shown at their original length, so you can see the data they contain.

- "Short" — tag contents are hidden, and you see only the tag positions in the text.

- "None" — tags are not displayed.
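The three markup display modes differ only in how each tag is rendered. In the hypothetical Python sketch below, a segment is a list of text and `Tag` parts, and the `{n}` placeholder used for "Short" mode is an assumption; the actual on-screen tag style may differ.

```python
from dataclasses import dataclass


@dataclass
class Tag:
    # Hypothetical inline tag: `raw` is the full tag markup stored in the segment.
    number: int
    raw: str


MODES = ("full", "short", "none")


def render(parts, mode):
    """Render text and Tag parts according to the markup display mode."""
    if mode not in MODES:
        raise ValueError(f"unknown mode: {mode}")
    out = []
    for part in parts:
        if not isinstance(part, Tag):
            out.append(part)                          # plain text is always shown
        elif mode == "full":
            out.append(part.raw)                      # original tag, data included
        elif mode == "short":
            out.append("{" + str(part.number) + "}")  # position only
        # mode == "none": the tag is skipped entirely
    return "".join(out)


segment = ["Click ", Tag(1, '<a href="https://example.com">'), "here", Tag(2, "</a>"), "."]
for mode in MODES:
    print(f"{mode}: {render(segment, mode)}")
```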

Units display

- "All" - units with and without mistakes are displayed.

- "With mistakes" - only units with mistakes are displayed.

- "Last commented by evaluator" — only units whose last comment was left by the evaluator are displayed.

- "Last commented by translator" — only units whose last comment was left by the translator are displayed.

- "Last commented by arbiter" — only units whose last comment was left by the arbiter are displayed.
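The "Units display" options behave like filters over the unit list. The Python sketch below is hypothetical: the `Unit` and `Comment` classes, the option strings and the assumption that comments are stored oldest first are all invented for illustration.

```python
from dataclasses import dataclass, field

ROLES = ("evaluator", "translator", "arbiter")
PREFIX = "last_commented_by_"


@dataclass
class Comment:
    author_role: str  # one of ROLES
    text: str


@dataclass
class Unit:
    # Hypothetical unit model used only for this illustration.
    unit_id: int
    mistakes: int = 0
    comments: list = field(default_factory=list)  # assumed oldest first


def filter_units(units, display):
    """Return the units shown for a given "Units display" option."""
    if display == "all":
        return list(units)
    if display == "with_mistakes":
        return [u for u in units if u.mistakes > 0]
    role = display[len(PREFIX):] if display.startswith(PREFIX) else None
    if role in ROLES:
        # Only the most recent comment decides whether the unit is shown.
        return [u for u in units if u.comments and u.comments[-1].author_role == role]
    raise ValueError(f"unknown display option: {display}")


units = [
    Unit(1),
    Unit(2, mistakes=1, comments=[Comment("evaluator", "Wrong term")]),
    Unit(3, mistakes=2, comments=[Comment("evaluator", "Omission"),
                                  Comment("translator", "The source omits this too")]),
]
print([u.unit_id for u in filter_units(units, "with_mistakes")])                 # [2, 3]
print([u.unit_id for u in filter_units(units, "last_commented_by_translator")])  # [3]
```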

Reevaluation and arbitration requests

When the evaluation is done, the translator can either complete the project or request a reevaluation if they disagree with the mistake severities.

If the translator requests a reevaluation, the evaluator has to reply to all of the translator's comments.

- Note: Unless the maximum number of evaluation attempts has been changed in the evaluation settings, the translator can request a reevaluation twice.

If the translator and evaluator still cannot agree, the translator can request arbitration.

The arbiter sets a final score that cannot be disputed and completes the project. Once the arbitration is completed, all project participants receive an email notification.
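The request flow can be summarised as a small state machine. The Python sketch below is a hypothetical illustration, not TQAuditor's implementation: the class, method and state names are invented, the default of two reevaluation requests follows the note above, and allowing arbitration after any completed evaluation round is an assumption.

```python
class EvaluationWorkflow:
    """Hypothetical sketch of the reevaluation and arbitration flow."""

    def __init__(self, max_reevaluations=2):
        # 2 mirrors the documented default; the real limit is set in the evaluation settings.
        self.max_reevaluations = max_reevaluations
        self.reevaluations_used = 0
        self.state = "evaluated"  # the evaluator has completed the evaluation

    def complete_project(self):
        if self.state != "evaluated":
            raise RuntimeError("project can only be completed after an evaluation")
        self.state = "completed"

    def request_reevaluation(self):
        if self.state != "evaluated":
            raise RuntimeError("reevaluation can only follow a completed evaluation")
        if self.reevaluations_used >= self.max_reevaluations:
            raise RuntimeError("maximum number of reevaluation requests reached")
        self.reevaluations_used += 1
        self.state = "reevaluation"  # evaluator must reply to every translator comment

    def finish_reevaluation(self):
        if self.state != "reevaluation":
            raise RuntimeError("no reevaluation in progress")
        self.state = "evaluated"

    def request_arbitration(self):
        if self.state != "evaluated":
            raise RuntimeError("arbitration can only follow a completed evaluation")
        self.state = "arbitration"

    def complete_arbitration(self):
        if self.state != "arbitration":
            raise RuntimeError("no arbitration in progress")
        self.state = "completed"  # final score; all participants are emailed


wf = EvaluationWorkflow()
for _ in range(2):
    wf.request_reevaluation()  # translator disputes mistake severities
    wf.finish_reevaluation()   # evaluator has replied to every comment
try:
    wf.request_reevaluation()  # a third request exceeds the default limit
except RuntimeError as err:
    print(err)  # maximum number of reevaluation requests reached
wf.request_arbitration()
wf.complete_arbitration()
print(wf.state)  # completed
```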