Evaluation report

Evaluation report page: http://cloud.tqauditor.com/evaluation/index?id=XXXX (replace XXXX with a valid number)

General information

After the evaluator has uploaded the files, the evaluation can be started.

You can configure the evaluation process:

- Skip locked units - hides "frozen" units (for example, parts that the client considers extremely important and wants to stay unchanged; hiding them also keeps extra units from slowing down the editor's work).

- Skip segments with match >= - the fuzzy match percentage threshold. The program hides segments whose match is greater than or equal to the value you specify.

- Evaluation sample word count limit - the word count limit for the edited segments that are chosen for evaluation.
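
The three settings above can be read as successive filters on the list of segments. The following is only a minimal sketch of that idea, not the program's actual implementation: the `Segment` class, the `build_sample` function, its parameter names, and the rule of stopping as soon as the word limit would be exceeded are all assumptions made for illustration, and words are counted by splitting on whitespace.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """Hypothetical segment record, used only for this illustration."""
    source: str         # source text of the segment
    locked: bool        # True for "frozen" units
    match_percent: int  # fuzzy match percentage, 0-100
    edited: bool        # True if the editor amended the translation


def build_sample(segments, skip_locked=True, skip_match_gte=None, word_limit=None):
    """Pick edited segments for evaluation according to the three settings."""
    sample, words = [], 0
    for seg in segments:
        if skip_locked and seg.locked:
            continue  # "Skip locked units"
        if skip_match_gte is not None and seg.match_percent >= skip_match_gte:
            continue  # "Skip segments with match >="
        if not seg.edited:
            continue  # only edited segments go into the sample
        seg_words = len(seg.source.split())
        if word_limit is not None and words + seg_words > word_limit:
            break  # assumed rule: stop once the limit would be exceeded
        sample.append(seg)
        words += seg_words
    return sample, words


segments = [
    Segment("one two three", locked=False, match_percent=0, edited=True),
    Segment("locked text here", locked=True, match_percent=0, edited=True),
    Segment("high match segment", locked=False, match_percent=100, edited=True),
    Segment("not edited", locked=False, match_percent=50, edited=False),
    Segment("four five", locked=False, match_percent=75, edited=True),
    Segment("six seven eight nine", locked=False, match_percent=10, edited=True),
]
sample, words = build_sample(segments, skip_locked=True, skip_match_gte=100, word_limit=5)
print(len(sample), words)
```

With these sample values, the locked unit, the 100% match, and the unedited segment are skipped, and the last segment is left out because it would push the sample past the five-word limit.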

Press "Start evaluation".

Mistakes

You can add a mistake:

You may describe it:

You may also edit or delete a mistake or comment:

Or add another mistake by pressing "Add mistake":

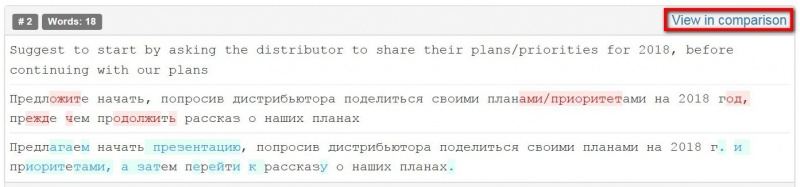

- View in comparison - this link redirects you to the Comparison report page:

When the mistake classification is done, the project evaluator has to press "Complete evaluation":

The evaluator may describe the translation in general or give advice to the translator, and then press the "Complete" button:

Buttons and filters

On the left side of the screen, various buttons and filters are displayed:

- Complete evaluation - the button that finishes the evaluation process.

- Evaluation report - evaluation report view.

- Delete evaluation report - deletes the evaluation report.

- Comparison report - comparison report view.

- Project details - basic information about the project.

- Project files - original translation and amended translation.

Markup display

The Markup display option defines how tags are displayed:

- Full - tags are shown at their original length, so you can see the data within them:

- Short - tags are compressed, and you see only their position in the text:

- None - tags are totally hidden, so they do not distract you:
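
To make the difference between the three modes concrete, here is a small sketch of how a segment might be rendered in each of them. It is an illustration only: the `render` function, the numbered placeholders used for the Short mode, and the assumption that a tag is anything enclosed in angle brackets are hypothetical and not taken from the program.

```python
import itertools
import re

# Assumed tag syntax: anything enclosed in angle brackets.
TAG = re.compile(r"<[^<>]+>")


def render(text, mode="Full"):
    """Return the segment text as it might appear in the given display mode."""
    if mode == "Full":
        return text  # tags keep their original length
    if mode == "Short":
        numbers = itertools.count(1)
        # Replace each tag with a short numbered placeholder that marks its position.
        return TAG.sub(lambda _match: f"<{next(numbers)}>", text)
    if mode == "None":
        return TAG.sub("", text)  # tags are removed from the displayed text
    raise ValueError(f"unknown markup display mode: {mode}")


segment = 'Press <b id="1">Start</b> now'
for mode in ("Full", "Short", "None"):
    print(f"{mode}: {render(segment, mode)}")
```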

Units display

- All units - shows all text segments:

- With corrections - shows only the amended segments:

Evaluation and comparison details

You can also find the evaluation and comparison details here, such as:

Evaluation sample details:

- Total units - the number of text segments in the sample.

- Total source words - the total number of source words in the sample.

- Total mistakes - the total number of mistakes.

Evaluation details:

- Skip locked units - whether "frozen" units are hidden (for example, parts that the client considers extremely important and wants to stay unchanged; hiding them also keeps extra units from slowing down the editor's work).

- Skip segments with match >= - the predefined fuzzy match percentage (the program hides segments whose match is greater than or equal to the value you specified).

- Total units - the total number of text segments.

- Corrected units - the number of segments with amendments.

- Total source words - the total number of words in the source.

- Source words in corrected units - the number of source words in amended segments.

- Quality score - a composite index of translation quality that depends on the total number of words, specialization, severity of mistakes, etc.

- Quality mark - the evaluation of the translator based on the quality score.

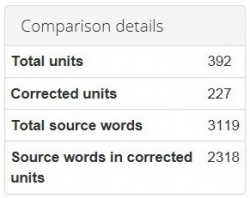

Comparison details:

- Total units - the total number of segments.

- Corrected units - the number of segments with amendments.

- Total source words - the total number of words in the source.

- Source words in corrected units - the number of source words in amended segments.
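
The four comparison figures above are simple counts over the list of segments. The sketch below shows one way they could be derived; it is an illustration under stated assumptions, not the program's own calculation. The `comparison_details` function and the tuple layout are hypothetical, a unit counts as corrected when its amended translation differs from the original one, and words are counted by splitting the source text on whitespace.

```python
def count_words(text):
    """Assumed word count: number of whitespace-separated tokens."""
    return len(text.split())


def comparison_details(units):
    """units: iterable of (source, original_translation, amended_translation)."""
    units = list(units)
    # A unit is treated as corrected if the amended translation differs.
    corrected = [u for u in units if u[1] != u[2]]
    return {
        "Total units": len(units),
        "Corrected units": len(corrected),
        "Total source words": sum(count_words(u[0]) for u in units),
        "Source words in corrected units": sum(count_words(u[0]) for u in corrected),
    }


units = [
    ("Hello world", "Hallo Welt", "Hallo Welt"),
    ("Save the file", "Speichern Datei", "Datei speichern"),
    ("Cancel", "Abbrechen", "Abbruch"),
]
details = comparison_details(units)
for name, value in details.items():
    print(f"{name}: {value}")
```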

When the mistake classification is done, the project evaluator has to press Complete evaluation => Complete, and the system will send the quality assessment report to the translator.

When the translator has received this report and looked through the classification of each mistake, they may press Complete project (if they agree with the evaluator; the project will be completed) or Request reevaluation (if they disagree).

If reevaluation is requested, the project will be sent to the evaluator, who will review the translator's comments.

If they are convincing, the evaluator may change the mistake severity. The translator will receive the reevaluated project.

The translator can send this project for reevaluation one more time.

If the translator and the evaluator still have not reached an agreement, the translator can send the project to the arbiter by pressing Request arbitration (it appears instead of Request reevaluation).
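
The workflow above can be summarized as a rule for which buttons the translator sees on a received report. The sketch below is only an interpretation of the text: the `translator_actions` function is hypothetical, the limit of two reevaluation requests is inferred from "one more time", and it is assumed that Complete project stays available alongside Request arbitration.

```python
# Assumed from "one more time": the translator may request reevaluation twice in total.
MAX_REEVALUATIONS = 2


def translator_actions(reevaluations_requested):
    """Return the buttons shown to the translator for a received report."""
    if reevaluations_requested < MAX_REEVALUATIONS:
        return ["Complete project", "Request reevaluation"]
    # After the last reevaluation, arbitration replaces reevaluation.
    return ["Complete project", "Request arbitration"]


for requested in range(3):
    print(requested, translator_actions(requested))
```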