Difference between revisions of "Quality score formula"


Revision as of 11:01, 28 January 2020


TQAuditor v3.0

How is the quality score calculated?

The quality score starts from the account's score limit and decreases with the weighted number of mistakes made per 1000 words of the translated text.

The formula is:

ROUND(GREATEST(account.score_limit - SUM(mistake_severity.score * mistake_type_spec.weight) / project.evaluation_total_word_count * 1000, 0), 2)

The formula interpretation:

ROUND($var1, 2) - returns the value rounded to two decimal places.

ROUND(GREATEST($var2, 0), 2) - returns 0 if $var2 is negative (example: -5 becomes 0); otherwise returns $var2 rounded to two decimal places.

Variables interpretation:

$var1 = GREATEST($var2, 0)

$var2 = account.score_limit - SUM(mistake_severity.score * mistake_type_spec.weight) / project.evaluation_total_word_count * 1000

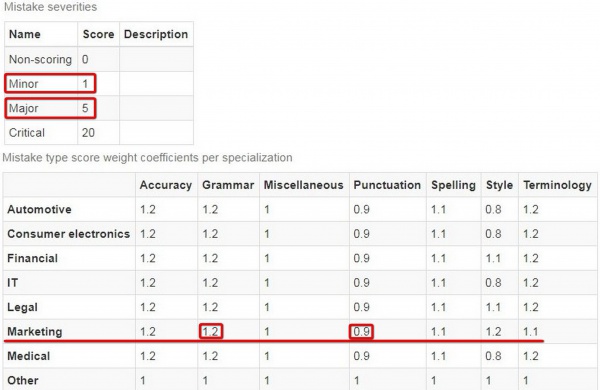

- mistake_severity.score - the mistake severity score.

- mistake_type_spec.weight - the weight coefficient of the mistake type per project specialization.

- project.evaluation_total_word_count - total source words after evaluation start filter is applied.

--

account.score_limit - equals 100 by default, but you can set the highest score limit you need in the Evaluation settings.

project.evaluation_total_word_count - it makes no difference whether the word count is calculated automatically or entered manually.
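The v3.0 formula can be sketched in Python as follows. This is a minimal illustration, not TQAuditor's actual code: the function name `quality_score_v3`, its parameters, and the input shape (a list of severity score and weight pairs) are assumptions made for the example. Python's built-in `round` works on binary floats, so the last digit may occasionally differ from a database `ROUND`.

```python
def quality_score_v3(mistakes, total_word_count, score_limit=100.0):
    """Sketch of ROUND(GREATEST(score_limit - penalty_per_1000_words, 0), 2).

    mistakes: iterable of (severity_score, weight) pairs (hypothetical input shape).
    total_word_count: stands in for project.evaluation_total_word_count.
    score_limit: stands in for account.score_limit (100 by default).
    """
    if total_word_count <= 0:
        raise ValueError("total_word_count must be positive")
    # SUM(mistake_severity.score * mistake_type_spec.weight)
    weighted_sum = sum(score * weight for score, weight in mistakes)
    # Normalize the penalty to 1000 words.
    penalty = weighted_sum / total_word_count * 1000
    # GREATEST(..., 0) clamps negative results to zero; ROUND(..., 2) keeps two decimals.
    return round(max(score_limit - penalty, 0.0), 2)


# Illustrative numbers only: two minor and three major mistakes.
mistakes = [(1, 0.9), (1, 0.9), (5, 1.2), (5, 1.2), (5, 1.2)]
print(quality_score_v3(mistakes, 1000))  # penalty of about 19.8 -> 80.2
print(quality_score_v3(mistakes, 100))   # penalty exceeds the limit -> 0.0
```

The second call shows the clamping step: without `GREATEST`, the same mistakes in a 100-word text would produce a negative score.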

TQAuditor v2.14

How is the quality score calculated?

Quality score reflects the number of mistakes made per 1000 words of translated text.

The formula is:

Quality score = Σ(mistake_severity.score * mistake_type_spec.weight) * project.evaluation_corrected_word_count / project.evaluation_sample_word_count / project.evaluation_total_word_count * 1000, where:

- Quality score - the number of "base" mistakes per 1000 words ("base" mistake - the mistake that has the severity score of 1 and the weight coefficient of 1).

- Σ - the sum of products of the mistake severity score and the weight coefficient of the mistake type per specialization.

- mistake_severity.score - the mistake severity score.

- mistake_type_spec.weight - the weight coefficient of the mistake type per project specialization.

- project.evaluation_corrected_word_count - source words in corrected units in the evaluation sample.

- project.evaluation_sample_word_count - total source words in the evaluation sample.

- project.evaluation_total_word_count - total source words after evaluation start filter is applied.
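As a rough Python sketch of the v2.14 formula (the function name `quality_score_v2` and the input shape are assumptions for illustration, not TQAuditor's actual code):

```python
def quality_score_v2(mistakes, corrected_word_count, sample_word_count, total_word_count):
    """Sketch of: sum(score * weight) * corrected / sample / total * 1000.

    mistakes: iterable of (severity_score, weight) pairs (hypothetical input shape).
    The three word counts stand in for project.evaluation_corrected_word_count,
    project.evaluation_sample_word_count and project.evaluation_total_word_count.
    """
    if sample_word_count <= 0 or total_word_count <= 0:
        raise ValueError("word counts used as divisors must be positive")
    weighted_sum = sum(score * weight for score, weight in mistakes)
    return (weighted_sum * corrected_word_count
            / sample_word_count / total_word_count * 1000)


# One "base" mistake (severity 1, weight 1) with all three counts equal to 1000
# gives a score of about 1: one base mistake per 1000 words.
print(quality_score_v2([(1, 1)], 1000, 1000, 1000))
```

Unlike the v3.0 score, this value grows with the number of mistakes, so a lower number means better quality.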

How is the number of translated words selected?

To make this clearer, consider an example:

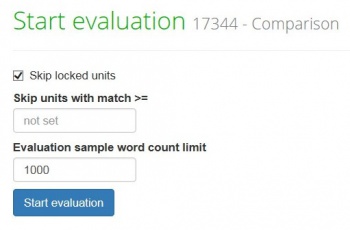

Before starting the evaluation, the reviewer selects the number of words to evaluate:

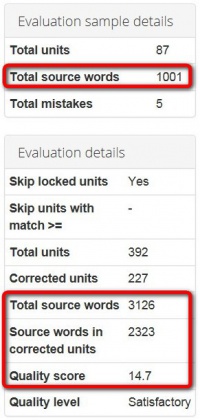

Now imagine that the whole translated text (marketing specialization) contains 3126 words, and the editor corrects the segments containing 2323 source words.

You select a 1000-word sample, which the system takes at random from the 2323 source words in corrected units, and add 5 mistakes of different types and severities. Two of them are minor punctuation mistakes (severity score of 1 and weight coefficient of 0.9 each), and three of them are major grammar mistakes (severity score of 5 and weight coefficient of 1.2 each).

Note: By default, the system has pre-defined quality standards, but you can define your own corporate quality standards.

As a result, we get:

Quality score = (1*0.9 + 1*0.9 + 5*1.2 + 5*1.2 + 5*1.2) * 2323 / 3126 / 1001 * 1000 = 14.699

You’ll be able to see these numbers on the evaluation page:

Where:

- Quality score = 14.7.

- project.evaluation_corrected_word_count = 2323.

- project.evaluation_sample_word_count = 3126.

- project.evaluation_total_word_count = 1001.
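The arithmetic of this example can be checked step by step with a few lines of Python. The variable names are illustrative, and the word counts are used in the same order as in the worked formula above:

```python
# (severity score, weight coefficient) for each of the five mistakes in the example.
mistakes = [(1, 0.9), (1, 0.9), (5, 1.2), (5, 1.2), (5, 1.2)]

# Sum of severity score times weight over all mistakes.
weighted_sum = sum(score * weight for score, weight in mistakes)

# Word counts exactly as they appear in the worked formula.
score = weighted_sum * 2323 / 3126 / 1001 * 1000

print(round(weighted_sum, 1))  # 19.8
print(round(score, 3))         # 14.699
print(round(score, 1))         # 14.7
```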

Why is it made that way?

We came to this through several stages of evolution.

At first, the system selected just the beginning of the text, and we found that translators started to translate the first 1000 words better than the rest of the text. So we decided to select a random part of the text. But then the system sometimes selected pieces containing no corrections while skipping heavily corrected parts. So we changed the logic: now the system returns the required number of corrections to the evaluator, but remembers how much text it took to find these corrections.

How is the quality score calculated for several projects?

When the quality score is evaluated for several projects (for example, when generating reports), the corresponding indicators of the projects are summed and the score is calculated according to the formula:

Average quality score = Σ(Σ(A)) * Σ(B) / Σ(C) / Σ(D) * 1000, where:

- Average quality score - the number of "base" mistakes per 1000 words in evaluated projects ("base" mistake - the mistake that has the severity score of 1 and the weight coefficient of 1).

- Σ(Σ(A)) - the total sum of products of the mistake severity scores and the weight coefficients of the mistake types per specialization of evaluated projects.

- Σ(B) - the sum of source words in corrected units in the evaluation samples of evaluated projects.

- Σ(C) - the sum of total source words in the evaluation samples of evaluated projects.

- Σ(D) - the sum of total source words in evaluated projects.

To put it simply, let's calculate the quality score of two projects:

| | Project A | Project B |
|---|---|---|
| Σ(A) - the sum of products of the mistake severity scores and the weight coefficients of the mistake types per specialization | 10 | 2.01 |
| B - source words in corrected units in the evaluation sample | 428 | 8956 |
| C - source words in the evaluation sample | 428 | 308 |
| D - total source words in the project | 1489 | 9979 |
| Quality score | 6.72 | 5.86 |
| Quality level | Good | Good |

As a result, we get:

Average quality score = (10 + 2.01) * (428 + 8956) / (428 + 308) / (1489 + 9979) * 1000 = 13.35 (which corresponds to the "Satisfactory" quality level).

As the example above shows, the average quality score and quality level can differ from the quality scores and quality levels of the individual projects, because the system sums the indicators of all projects before applying the formula rather than averaging the per-project scores.
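The two-project example can be reproduced with a short Python sketch. The function name `average_quality_score` and the dictionary keys `a`, `b`, `c`, `d` are hypothetical and simply mirror the letters used in the formula; this is an illustration, not TQAuditor's actual code.

```python
def average_quality_score(projects):
    """Sketch of: sum(A) * sum(B) / sum(C) / sum(D) * 1000 over all projects.

    projects: list of dicts with keys 'a', 'b', 'c', 'd' (hypothetical names),
    where 'a' is the project's weighted mistake sum and 'b', 'c', 'd' are its
    corrected, sample and total source word counts.
    """
    sum_a = sum(p["a"] for p in projects)
    sum_b = sum(p["b"] for p in projects)
    sum_c = sum(p["c"] for p in projects)
    sum_d = sum(p["d"] for p in projects)
    return sum_a * sum_b / sum_c / sum_d * 1000


project_a = {"a": 10, "b": 428, "c": 428, "d": 1489}
project_b = {"a": 2.01, "b": 8956, "c": 308, "d": 9979}

# With a single project the formula reduces to the per-project quality score.
print(round(average_quality_score([project_a]), 2))             # 6.72
print(round(average_quality_score([project_b]), 2))             # 5.86
# Indicators are summed first, so the result is not the mean of 6.72 and 5.86.
print(round(average_quality_score([project_a, project_b]), 2))  # 13.35
```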