Difference between revisions of "Quality score formula"

From TQAuditor Wiki




Latest revision as of 15:20, 28 January 2022


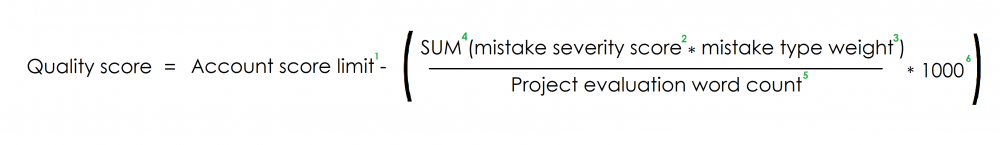

Quality score formula visualisation and interpretation

- 1. Account score limit

- The highest possible score (100 by default). It can be set on the [Evaluation settings](https://cloud.tqauditor.com/system/evaluation-settings) page.

- 2. Mistake severity score

- The score assigned to a mistake according to its severity (for example, 1 point for a minor mistake and 5 points for a major one). It can be set on the [Mistake severities list](https://cloud.tqauditor.com/mistake-severity/index) page.

- 3. Mistake type weight

- The weight of a specific mistake type for a given specialization. It can be adjusted on the [Mistake types list](https://cloud.tqauditor.com/mistake-type/index) page.

- 4. SUM

- The sum, over all mistakes, of the product of the mistake severity score (#2) and the mistake type weight (#3).

- 5. Project evaluation word count

- Automatic word count: The value from the "Total source words" field in the "Evaluation details" section (not the "Total source words" value of the file). Please see the Automatic vs. manual word count page.

- Manual word count: The value from the "Evaluated source words" field (specified when starting the evaluation with a manual word count).

- 6. 1000

- The result is always normalized to 1000 words, which serves as the reference volume.

The mistake severity scores, mistake type weights, and reports are all based on this 1000-word amount. For example, a critical mistake with a score of 20 and a weight coefficient of 1 reduces the quality score by 20 points in a 1000-word text, by 10 points in a 2000-word text, and so on.
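Read together, the six components above suggest the following written form of the formula. This is a reconstruction that matches the worked examples on this page; the symbols $L$, $s_i$, $w_i$ and $W$ are introduced here for illustration and do not appear in TQAuditor itself.

```latex
\[
  \text{Quality score} \;=\; L \;-\; \frac{\sum_{i} s_i \, w_i}{W} \times 1000
\]
% L   : account score limit (100 by default)
% s_i : severity score of mistake i
% w_i : type weight of mistake i
% W   : project evaluation word count (automatic or manual)
```

With $L = 100$, a single mistake with $s = 20$ and $w = 1$ gives $100 - 20/1000 \times 1000 = 80$ for 1000 words and $100 - 20/2000 \times 1000 = 90$ for 2000 words, in line with the example above.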

For more information, please check the Quality score formula:Details and versions page.

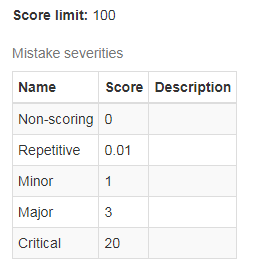

Quality score calculation examples

In the examples below, the following quality standard is used:

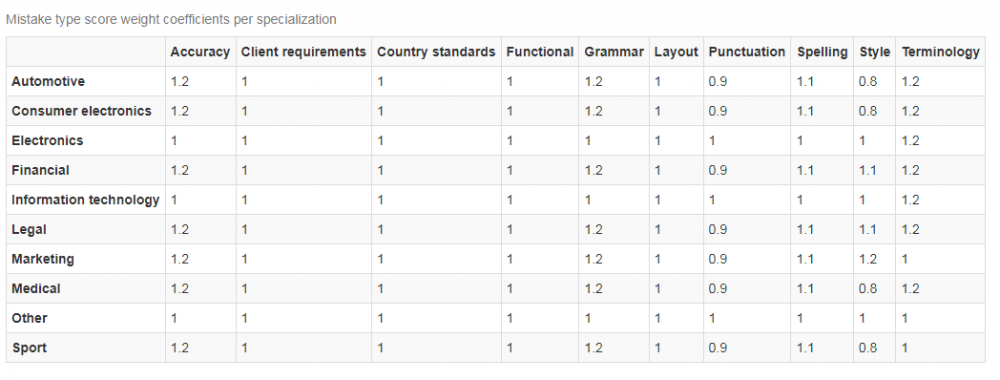

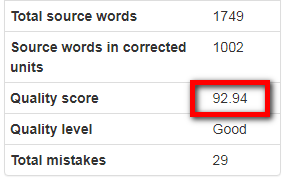

Automatic word count

- File details:

- Total source words: 3126

- Fully reviewed: yes

- Number of units (segments): 392

- Project specialization: marketing

- Evaluation settings:

- Evaluation details:

- Total source words: 1749

- Source words in corrected units: 1002

Mistakes:

- Major (3 points each):

- Terminology: 1 (weight coefficient 1)

- Grammar: 1 (weight coefficient 1.2)

- Minor (1 point each):

- Grammar: 3 (weight coefficient 1.2)

- Functional: 2 (weight coefficient 1)

- Repetitive (0.01 point each):

- Functional: 6 (weight coefficient 1)

- Layout: 8 (weight coefficient 1)

- Non-scoring (0 points each):

- Style: 5 (weight coefficient 1.2)

- Terminology: 3 (weight coefficient 1)

- Total mistakes: 29

- SUM: 1×3×1 + 1×3×1.2 + 3×1×1.2 + 2×1×1 + 6×0.01×1 + 8×0.01×1 + 5×0×1.2 + 3×0×1 = 3 + 3.6 + 3.6 + 2 + 0.06 + 0.08 + 0 + 0 = 12.34
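The SUM above can be reproduced with a short script. This is only a sketch: the tuple layout and the name `weighted_sum` are hypothetical choices made for illustration, not part of TQAuditor; the numbers are taken from the mistake list above.

```python
# Each entry: (severity score, type weight, number of mistakes).
# Values come from the mistake list in this example.
mistakes = [
    (3, 1.0, 1),     # Major, Terminology
    (3, 1.2, 1),     # Major, Grammar
    (1, 1.2, 3),     # Minor, Grammar
    (1, 1.0, 2),     # Minor, Functional
    (0.01, 1.0, 6),  # Repetitive, Functional
    (0.01, 1.0, 8),  # Repetitive, Layout
    (0, 1.2, 5),     # Non-scoring, Style
    (0, 1.0, 3),     # Non-scoring, Terminology
]


def weighted_sum(entries):
    """Sum of severity score * type weight over all mistakes."""
    return sum(score * weight * count for score, weight, count in entries)


total_mistakes = sum(count for _, _, count in mistakes)
print(total_mistakes)                     # 29
print(round(weighted_sum(mistakes), 2))   # 12.34
```

Non-scoring mistakes are counted in the total of 29 but contribute nothing to the SUM, because their severity score is 0.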

- Quality score calculation:

Using the formula: 100 − (12.34 / 1749 × 1000) ≈ 92.94
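The same calculation as a minimal sketch in code. The function name `quality_score` and its parameter names are assumptions made for this example; the default limit of 100 and the 1000-word base are the values described on this page.

```python
def quality_score(weighted_sum, word_count, score_limit=100.0, base=1000):
    """Score limit minus the weighted mistake sum per `base` words."""
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    return score_limit - weighted_sum / word_count * base


# Automatic word count: "Total source words" from the evaluation details.
score = quality_score(12.34, 1749)
print(f"{score:.2f}")  # 92.94
```

Note that the word count used is 1749 (evaluation details), not 3126 (the total source words of the file).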

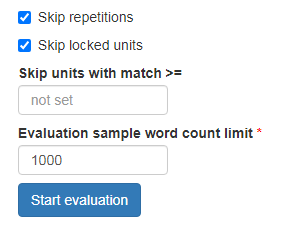

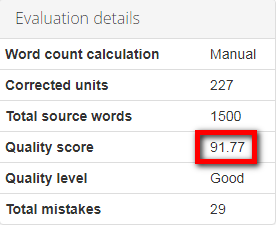

Manual word count

- File details:

- Total source words: 3126

- Fully reviewed: no

- Number of words in the reviewed part of the file: around 1500

- Number of units (segments): 392

- Project specialization: marketing

- Evaluation settings:

- Evaluated source words: 1500

- Evaluation details:

- Corrected units: 227

- Total source words: 1500

Mistakes:

- Major (3 points each):

- Terminology: 1 (weight coefficient 1)

- Grammar: 1 (weight coefficient 1.2)

- Minor (1 point each):

- Grammar: 3 (weight coefficient 1.2)

- Functional: 2 (weight coefficient 1)

- Repetitive (0.01 point each):

- Functional: 6 (weight coefficient 1)

- Layout: 8 (weight coefficient 1)

- Non-scoring (0 points each):

- Style: 5 (weight coefficient 1.2)

- Terminology: 3 (weight coefficient 1)

- Total mistakes: 29

- SUM: 1×3×1 + 1×3×1.2 + 3×1×1.2 + 2×1×1 + 6×0.01×1 + 8×0.01×1 + 5×0×1.2 + 3×0×1 = 3 + 3.6 + 3.6 + 2 + 0.06 + 0.08 + 0 + 0 = 12.34

- Quality score calculation:

Using the formula: 100 − (12.34 / 1500 × 1000) ≈ 91.77
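Both examples use the same mistakes, so the only thing that changes the result is the word count. The sketch below puts the two side by side; `quality_score` is a hypothetical helper written for this comparison, not a TQAuditor function.

```python
def quality_score(weighted_sum, word_count, score_limit=100.0, base=1000):
    """Score limit minus the weighted mistake sum per `base` words."""
    return score_limit - weighted_sum / word_count * base


SUM = 12.34  # identical in the automatic and manual examples

word_counts = {
    "automatic": 1749,  # "Total source words" in the evaluation details
    "manual": 1500,     # "Evaluated source words" entered by the evaluator
}

scores = {mode: quality_score(SUM, words) for mode, words in word_counts.items()}

for mode, score in scores.items():
    print(f"{mode}: {score:.2f}")
# automatic: 92.94
# manual: 91.77
```

The smaller word count spreads the same SUM over fewer words, so the manual example ends up with the lower score.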